Fantastical notions of all-powerful robots, straight out of Hollywood, may come to mind when you think about artificial intelligence (AI). But set aside thoughts of the machines taking over: When it comes to your hearing aids, AI helps the devices work better.

For instance, AI can help wrangle one of the most challenging situations if you struggle to hear: Engaging in a conversation in a crowded, loud space (think: a restaurant or cafe). As you know if you wear a hearing aid, simply making everything louder isn’t the solution.

From month to month, year to year, researchers are finding more ways to harness this technology and use it to improve hearing aids. Here’s what you need to know about how hearing aids use AI, and whether a hearing aid with this functionality is right for you or a loved one.

Key terms: AI, machine learning, deep neural network

Put simply, artificial intelligence is defined as the ability of a machine to simulate human intelligence, performing a set of tasks that require “intelligent” decisions by following predetermined rules.

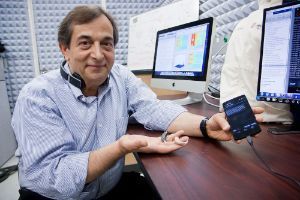

“Artificial intelligence is a very broad definition. Machine learning, neural network, deep learning, and all of those, fall under the AI umbrella,” says Issa M.S. Panahi, PhD, professor of electrical and computer engineering in the Erik Jonsson School of Engineering and Computer Science at the University of Texas at Dallas.

Through machine learning, a subset of AI, machines use algorithms (aka sets of rules) to sift through huge amounts of data, learn patterns from it, and make decisions or predictions.

Go one level deeper, and we get to the deep neural network (DNN): This form of AI is loosely modeled on networks of neurons in the brain, and it aims to respond the same way your brain would, without being explicitly programmed how to react in a given situation.

You’re familiar with this technology if your inbox sorts emails into categories (important, promotional, etc.), if you take advantage of recommendations of “what to watch next” on streaming networks, or if you’ve marveled over self-parking cars. Some more mundane but important examples of deep learning include weather forecasting and credit card fraud protection. These tools have gotten much better in recent years due to deep learning.

How hearing aids use AI

“The AI that occurs in hearing aids has actually been going on for years, but it’s a slow burn to think about how that’s actually happened,” says Scott Young, AuD, CCC-A, owner of Hearing Solution Centers, Inc. in Tulsa, Okla.

Hearing aids used to be relatively simple, he notes, but with the introduction of a technology known as wide dynamic range compression (WDRC), the devices began to make a few decisions based on what they heard.
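In practice, WDRC means the hearing aid measures how loud an incoming sound is and chooses its amplification accordingly: soft sounds get plenty of gain, loud sounds get much less. Below is a minimal single-channel sketch of that gain rule. The kneepoint, ratio, and gain values are illustrative assumptions rather than settings from any real device, and actual hearing aids apply compression separately in many frequency channels, with attack and release times this sketch ignores.

```python
def wdrc_gain_db(input_db: float,
                 threshold_db: float = 45.0,    # hypothetical compression kneepoint (dB SPL)
                 ratio: float = 2.0,            # hypothetical compression ratio
                 linear_gain_db: float = 25.0,  # hypothetical gain for soft sounds (dB)
                 ) -> float:
    """Return the gain (in dB) applied to a sound arriving at input_db."""
    if input_db <= threshold_db:
        # Below the kneepoint: soft sounds receive the full gain.
        return linear_gain_db
    # Above the kneepoint: output rises only 1/ratio dB per dB of input,
    # so the gain shrinks as the incoming sound gets louder.
    excess_db = input_db - threshold_db
    return linear_gain_db - excess_db * (1.0 - 1.0 / ratio)


for level in (40, 55, 70, 85):
    gain = wdrc_gain_db(level)
    print(f"input {level} dB -> gain {gain:.1f} dB -> output {level + gain:.1f} dB")
```

With these made-up settings, inputs spanning 45 dB (40 to 85 dB) come out spanning just 25 dB (65 to 90 dB): soft sounds become audible without loud sounds becoming uncomfortable. That level-dependent choice of gain is the kind of simple “decision” Young describes.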

“Over the last several years, AI has come even further. It actually listens to what the environment does,” Young says. And it responds accordingly. Essentially, a DNN allows hearing aids to begin to mimic how your brain would hear sound if your hearing weren’t impaired.

For hearing aids to work effectively, they need to adapt to a person’s individual hearing needs as well as all sorts of background noise environments, Panahi says. “AI, machine learning, and neural networks, are very good techniques to deal with such a complicated, nonlinear, multi-variable type of problem,” he says.

What the research shows

Researchers have already accomplished a lot with AI when it comes to improving hearing.

For instance, researchers at the Perception and Neurodynamics Laboratory (PNL) at the Ohio State University trained a DNN to distinguish speech (what people want to hear) from other noise (such as humming and other background conversations), writes DeLiang Wang, professor of computer science and engineering at Ohio State University, in IEEE Spectrum. “People with hearing impairment could decipher only 29 percent of words muddled by babble without the program, but they understood 84 percent after the processing,” Wang writes.
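One widely used way to frame this speech-versus-noise task is time-frequency masking: the sound is divided into many small slices of time and frequency, and each slice is kept if speech dominates it or suppressed if noise does. The sketch below computes an “ideal” binary mask from known speech and noise energies, which is only possible when the clean signals are available; a trained DNN’s job is to estimate a mask like this from the noisy mixture alone. The energy values and the 0 dB threshold are made up for illustration, and this is not a reproduction of the Ohio State system.

```python
import math

# Hypothetical energies for a tiny grid of time-frequency units
# (rows = frequency channels, columns = short time frames).
speech = [[9.0, 0.5, 4.0],
          [0.2, 6.0, 0.1],
          [3.0, 3.0, 0.3]]
noise = [[1.0, 2.0, 1.0],
         [1.0, 1.0, 2.0],
         [3.0, 1.0, 1.0]]

# Roughly approximate the noisy mixture's energy as speech + noise in each unit.
mixture = [[s + n for s, n in zip(s_row, n_row)]
           for s_row, n_row in zip(speech, noise)]


def ideal_binary_mask(speech, noise, threshold_db=0.0):
    """1 = keep the unit (speech dominates), 0 = suppress it (noise dominates)."""
    return [[1 if 10 * math.log10(s / n) > threshold_db else 0
             for s, n in zip(s_row, n_row)]
            for s_row, n_row in zip(speech, noise)]


def apply_mask(mixture, mask):
    """Keep the speech-dominated units of the mixture and zero out the rest."""
    return [[value * keep for value, keep in zip(m_row, k_row)]
            for m_row, k_row in zip(mixture, mask)]


mask = ideal_binary_mask(speech, noise)
print(mask)  # [[1, 0, 1], [0, 1, 0], [0, 1, 0]]
print(apply_mask(mixture, mask))
```

Real systems work on thousands of such units per second and often use soft (ratio) masks instead of hard 0/1 decisions, but the principle of keeping speech-dominated regions is the same.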

Dr. Issa Panahi is working on smartphone-based AI apps to help people with hearing loss.

And at the University of Texas at Dallas, Panahi, along with co-principal investigator Dr. Linda Thibodeau, used AI to create a smartphone app that can tell which direction speech is coming from. The app relies on models built from a massive library of sounds to identify and reduce background noise, so people hear better. Place a smartphone running the app on a table, or rest it in the GPS stand in your car, and “clean speech is transmitted to the hearing aid devices or earbuds,” Panahi says.

“The importance of AI is it overcom[es] a lot of issues that cannot be easily solved by a traditional mathematical approach for signal processing,” Panahi says.

The app is not yet available to the public, Dr. Thibodeau says (code, demos, and more information are available on the website).
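For a sense of what locating a talker involves, the sketch below uses a classic, non-AI signal-processing technique rather than the machine-learning models the UT Dallas app relies on: it cross-correlates the signals from two microphones to find how much later the sound reached one than the other, then converts that delay into an angle. The microphone spacing, sample rate, and simulated signal are assumptions chosen for illustration.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, speed of sound in air at about 20 °C
MIC_SPACING = 0.14      # m, hypothetical distance between the phone's two microphones
SAMPLE_RATE = 48_000    # Hz, assumed sampling rate
# Largest physically possible delay between the mics, in samples (rounded up).
MAX_LAG = math.ceil(MIC_SPACING / SPEED_OF_SOUND * SAMPLE_RATE)


def estimate_delay(left, right, max_lag):
    """Return the lag (in samples) at which `right` best matches `left`.

    A positive result means the sound reached the left microphone first.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Cross-correlation: sum of products over the overlapping samples.
        score = sum(left[i] * right[i + lag]
                    for i in range(len(left))
                    if 0 <= i + lag < len(right))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_degrees(lag):
    """Convert a delay into an arrival angle.

    0 degrees = perpendicular to the line between the mics;
    +/-90 degrees = along that line.
    """
    delay_s = lag / SAMPLE_RATE
    # Clamp to [-1, 1] so an imperfect delay estimate can't break asin().
    ratio = max(-1.0, min(1.0, SPEED_OF_SOUND * delay_s / MIC_SPACING))
    return math.degrees(math.asin(ratio))


# Simulate a noise-like burst that reaches the right mic 10 samples late.
rng = random.Random(0)
left = [rng.gauss(0.0, 1.0) for _ in range(2000)]
right = [0.0] * 10 + left[:-10]

lag = estimate_delay(left, right, MAX_LAG)
print(lag, round(direction_degrees(lag), 1))
```

Run as written, it should report the 10-sample delay, an angle of roughly 31 degrees. With only two microphones, an angle like this cannot distinguish a talker in front of the phone from one behind it, and real rooms add echoes that make the correlation peak much harder to find.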

Neural-network-powered hearing aids

In recent years, major hearing aid manufacturers have been adding AI technology to their premium models. For example, Widex’s Moment hearing aid uses AI and machine learning to create hearing programs based on a wearer’s typical environments.

And this January, Oticon introduced its newest hearing aid, Oticon More™, which the company calls the first hearing aid with an on-board deep neural network. Oticon More was trained on more than 12 million real-life sounds so that people wearing it can better understand speech and the sounds around them.

In a complicated “sound scene,” such as a bustling airport or hospital emergency room, the Oticon More’s neural network receives a complex mix of sounds, known as the input. The DNN first scans the input and extracts simple sound elements and patterns. It then combines these elements to recognize and make sense of what’s happening. Finally, the hearing aids decide how to balance the sound scene, so that the output is clean and tuned to the wearer’s particular type of hearing loss.

This improvement is especially important for understanding speech in noise, explained Donald J. Schum, PhD, Vice President of Audiology at Oticon, during the product launch event.

“Speech and other sounds in the environment are complicated acoustic wave forms, but with unique patterns and structures that are exactly the sort of data deep learning is designed to analyze,” he said. “We wanted our system to be able to find speech even when it’s embedded in background noise. And that’s happening in real-time and in an ongoing basis.”
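The layered flow Oticon describes (pick out simple patterns, combine them, then decide) mirrors how a feed-forward neural network processes its input. The toy sketch below runs a single forward pass through a two-layer network that outputs a “speech present” score. Its two input features and all of its weights are hypothetical and set by hand, whereas a real network learns its weights from training data; it bears no relation to the network inside Oticon More, which is far larger.

```python
import math


def relu(x):
    """Pass positive values through and zero out negative ones."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical, hand-picked weights (a real network learns these from data).
# Input features for one short slice of sound:
#   [how much the loudness fluctuates, how steady the energy is]
LAYER1_WEIGHTS = [[2.0, -1.0],   # unit 0: responds to fluctuating, speech-like sound
                  [-1.0, 2.0]]   # unit 1: responds to steady, noise-like sound
LAYER1_BIAS = [0.0, 0.0]
LAYER2_WEIGHTS = [1.5, -1.5]     # combines the two units into one decision
LAYER2_BIAS = 0.0


def speech_probability(features):
    """One forward pass: detect simple patterns, then combine them into a score."""
    hidden = [relu(sum(w * x for w, x in zip(row, features)) + b)
              for row, b in zip(LAYER1_WEIGHTS, LAYER1_BIAS)]
    score = sum(w * h for w, h in zip(LAYER2_WEIGHTS, hidden)) + LAYER2_BIAS
    return sigmoid(score)


print(round(speech_probability([1.0, 0.2]), 3))  # fluctuating input -> 0.937
print(round(speech_probability([0.2, 1.0]), 3))  # steady input -> 0.063
```

A deep network stacks many more such layers, which lets later layers respond to combinations of the patterns found by earlier ones.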

Do I need a hearing aid with AI?

Think of hearing aids as existing on a spectrum, says Young. They range widely in price, and some at the lower end have fewer AI-driven bells and whistles.

He points out that some patients may not need all the features—people who live alone or rarely leave the house, and don’t find themselves in crowded scenarios often, for instance, might not benefit from the functionality found in higher-end models.

But for anyone who is out and about a lot, especially in large, busy soundscapes, AI-powered features can make for a better hearing experience.

Listening effort is reduced

What “improvement” looks like can be measured in a lot of ways, but one key indicator is memory recall, Schum explained. It’s not that hearing aids like Oticon More literally improve a person’s memory, he said; rather, artificial intelligence helps people spend less mental energy trying to make sense of the noise around them, reducing what’s known as “listening effort.”

When listening takes less effort, a person can focus more on the conversation and all the nuances conveyed within it.

“It’s allowing the brain to work in the most natural way possible,” he said.
